#kubernetes administration


Top Kubeadm commands to Manage your Kubernetes cluster

#kubernetes #kubeadm #kubernetesmanagement #kubernetesadministration #kubernetesconfig #k8stools #topkubeadmcommands #virtualizationhowto #devops #homelab #selfhosted #containers #containerd #docker

Kubeadm is a command-line tool for bootstrapping and managing Kubernetes clusters for development or production. This guide looks at the top kubeadm commands for managing your Kubernetes cluster and what you need to know. Table of contents: 1. Installing Kubeadm; 2. Kubeadm clusters initialization and configuration; 3. Adding worker nodes; 4. Upgrading your Kubernetes cluster (Applying the upgrade); 5. …
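The steps named in that table of contents (initialization, adding worker nodes, upgrading) map onto a handful of kubeadm subcommands. As a minimal sketch, the Python helper below only assembles those command lines as argument lists and prints them; it does not run anything. The endpoint, token, certificate hash, pod CIDR, and version are placeholders chosen for illustration, not values from the original guide.

```python
import shlex


def kubeadm_workflow(endpoint, token, ca_cert_hash, target_version,
                     pod_cidr="10.244.0.0/16"):
    """Return kubeadm command lines for a basic cluster lifecycle.

    Every argument value is a placeholder supplied by the caller.
    """
    return {
        # Run on the first control-plane node to bootstrap the cluster.
        "init": ["kubeadm", "init", f"--pod-network-cidr={pod_cidr}"],
        # Run on the control plane to print a ready-made join command.
        "token": ["kubeadm", "token", "create", "--print-join-command"],
        # Run on each worker node to add it to the cluster.
        "join": ["kubeadm", "join", endpoint, "--token", token,
                 "--discovery-token-ca-cert-hash", ca_cert_hash],
        # Run on the control plane to list available upgrade targets.
        "upgrade_plan": ["kubeadm", "upgrade", "plan"],
        # Run on the control plane to apply the chosen version.
        "upgrade_apply": ["kubeadm", "upgrade", "apply", target_version],
    }


if __name__ == "__main__":
    commands = kubeadm_workflow(
        endpoint="192.0.2.10:6443",       # placeholder API server address
        token="abcdef.0123456789abcdef",  # placeholder bootstrap token
        ca_cert_hash="sha256:<hash>",     # placeholder CA certificate hash
        target_version="v1.30.1",         # placeholder target version
    )
    for step, argv in commands.items():
        print(f"{step}: {shlex.join(argv)}")
```

In practice an upgrade also involves upgrading the kubeadm package first, running `kubeadm upgrade node` on the remaining nodes, and upgrading the kubelet on each node.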


Navigating Kubernetes Certification: Your Path to Cloud-Native Mastery

Kubernetes certification validates expertise in container orchestration. It demonstrates a deep understanding of Kubernetes, enabling efficient application deployment and management. These certifications, such as CKA, CKAD, and others, are highly respected in the IT industry and are essential for professionals aiming to excel in the world of cloud-native application development.

#kubernetes administrator certification #kubernetes aws #kubernetes certification #kubernetes deployment


Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025 #In- Demand Tech Jobs #High paying Tech Jobs #artificial intelligence #datascience #cybersecurity


i opened up the Files app in my phone to read the kubernetes certified administrator curriculum pdf and it opened to this


Journey to DevOps

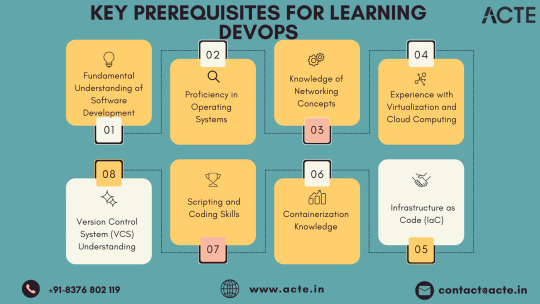

The concept of “DevOps” has been gaining traction in the IT sector for several years. It promotes teamwork and interaction between software developers and IT operations groups to enhance the speed and reliability of software delivery. This strategy has become widely accepted as companies strive to deliver software that meets customer needs and to maintain an edge in the industry. In this article, we will explore the steps to becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

To pursue a career as a DevOps Engineer, it is crucial to have a solid grasp of software development and IT operations. Familiarity with programming languages like Python, Java, Ruby, or PHP is essential. Additionally, knowledge of operating systems, databases, and networking is vital.

Step 2: Learn the principles of DevOps:

It is crucial to comprehend and apply the principles of DevOps. Automation, continuous integration, continuous deployment and continuous monitoring are aspects that need to be understood and implemented. It is vital to learn how these principles function and how to carry them out efficiently.

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system, is widely used by DevOps teams for code repository management. It tracks code changes, facilitates collaboration among team members, and preserves a record of modifications made to the codebase.

Ansible: Ansible is an open-source tool used for managing configurations, deploying applications, and automating tasks. It simplifies infrastructure management and saves time on repetitive tasks.

Docker: Docker is a containerization platform that allows DevOps engineers to bundle applications and their dependencies into containers. This ensures consistency and compatibility across environments, from development to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that helps manage and scale containers. It helps automate the deployment, scaling, and management of applications and micro-services.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that helps monitor the health and performance of IT infrastructure. It also helps identify and resolve issues in real time, supporting the high availability and reliability of IT systems.

Terraform: Terraform is an infrastructure as code (IaC) tool that helps manage and provision IT infrastructure. It helps automate the process of provisioning and configuring IT resources and ensures consistency between development and production environments.
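To make the Docker and Kubernetes entries above more concrete, here is a minimal sketch of a Kubernetes Deployment manifest, built as a Python dictionary and printed as JSON (kubectl accepts JSON as well as YAML). The application name `web`, the image `nginx:1.27`, and the replica count are illustrative assumptions, not part of the original article.

```python
import json


def deployment_manifest(name, image, replicas=2, port=80):
    """Build an apps/v1 Deployment that runs `replicas` copies of `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes keeps this many pod replicas running.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,  # the container image built with Docker
                        "ports": [{"containerPort": port}],
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.27"), indent=2))
```

Saving the output to a file such as `deployment.json` and running `kubectl apply -f deployment.json` would ask the cluster to create and maintain those replicas.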

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects and bootcamps. You can start by contributing to open-source projects or participating in coding challenges and hackathons. You can also attend workshops and online courses to improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and demonstrate your expertise to employers. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. You can join online communities, attend conferences, join webinars and connect with other professionals in the field. This will help you stay up-to-date with the latest developments and also help you find job opportunities and success.

Conclusion:

By following these steps, you can start your journey towards a successful career in DevOps. The most important thing is to be passionate about your work and to keep learning and improving your skills. With the right skills, experience, and network, you can achieve great success in this field.


DevOps Landscape: Building Blocks for a Seamless Transition

In the dynamic realm where software development intersects with operations, the role of a DevOps professional has become instrumental. For individuals aspiring to make the leap into this dynamic field, understanding the key building blocks can set the stage for a successful transition. While there are no rigid prerequisites, acquiring foundational skills and knowledge areas becomes pivotal for thriving in a DevOps role.

1. Embracing the Essence of Software Development: At the core of DevOps lies collaboration, making it essential for individuals to have a fundamental understanding of software development processes. Proficiency in coding practices, version control, and the collaborative nature of development projects is paramount. Additionally, a solid grasp of programming languages and scripting adds a valuable dimension to one's skill set.

2. Navigating System Administration Fundamentals: DevOps success is intricately linked to a foundational understanding of system administration. This encompasses knowledge of operating systems, networks, and infrastructure components. Such familiarity empowers DevOps professionals to adeptly manage and optimize the underlying infrastructure supporting applications.

3. Mastery of Version Control Systems: Proficiency in version control systems, with Git taking a prominent role, is indispensable. Version control serves as the linchpin for efficient code collaboration, allowing teams to track changes, manage codebases, and seamlessly integrate contributions from multiple developers.

4. Scripting and Automation Proficiency: Automation is a central tenet of DevOps, emphasizing the need for scripting skills in languages like Python, Shell, or Ruby. This skill set enables individuals to automate repetitive tasks, fostering more efficient workflows within the DevOps pipeline.

5. Embracing Containerization Technologies: The widespread adoption of containerization technologies, exemplified by Docker, and orchestration tools like Kubernetes, necessitates a solid understanding. Mastery of these technologies is pivotal for creating consistent and reproducible environments, as well as managing scalable applications.

6. Unveiling CI/CD Practices: Continuous Integration and Continuous Deployment (CI/CD) practices form the beating heart of DevOps. Acquiring knowledge of CI/CD tools such as Jenkins, GitLab CI, or Travis CI is essential. This proficiency ensures the automated execution of code testing, integration, and deployment processes, streamlining development pipelines.

7. Harnessing Infrastructure as Code (IaC): Proficiency in Infrastructure as Code (IaC) tools, including Terraform or Ansible, constitutes a fundamental aspect of DevOps. IaC facilitates the codification of infrastructure, enabling the automated provisioning and management of resources while ensuring consistency across diverse environments.

8. Fostering a Collaborative Mindset: Effective communication and collaboration skills are non-negotiable in the DevOps sphere. The ability to seamlessly collaborate with cross-functional teams, spanning development, operations, and various stakeholders, lays the groundwork for a culture of collaboration essential to DevOps success.

9. Navigating Monitoring and Logging Realms: Proficiency in monitoring tools such as Prometheus and log analysis tools like the ELK stack is indispensable for maintaining application health. Proactive monitoring equips teams to identify issues in real-time and troubleshoot effectively.

10. Embracing a Continuous Learning Journey: DevOps is characterized by its dynamic nature, with new tools and practices continually emerging. A commitment to continuous learning and adaptability to emerging technologies is a fundamental trait for success in the ever-evolving field of DevOps.
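The idea behind item 7, Infrastructure as Code, is that you declare the desired state and let a tool work out which changes are needed. The sketch below is a simplified illustration of that comparison step in plain Python. It is not how Terraform or Ansible are implemented, and the resource names and attributes are made up for the example.

```python
def plan(desired, current):
    """Compare desired and current resources and list the actions needed.

    Both arguments map a resource name to a dict of attributes.
    """
    actions = []
    for name, attrs in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))   # declared but missing
        elif current[name] != attrs:
            actions.append(("update", name))   # exists but has drifted
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))   # exists but no longer declared
    return actions


if __name__ == "__main__":
    # Hypothetical declared state, as it might be kept in version control.
    desired = {
        "web-server": {"size": "small", "count": 2},
        "database": {"size": "medium", "count": 1},
    }
    # Hypothetical state currently running in the environment.
    current = {
        "web-server": {"size": "small", "count": 1},
        "old-cache": {"size": "small", "count": 1},
    }
    for action, name in plan(desired, current):
        print(action, name)
```

Because the declared state lives in version control, the same comparison can be repeated against every environment, which is where the consistency benefit comes from.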

In summary, while the transition to a DevOps role may not have rigid prerequisites, the acquisition of these foundational skills and knowledge areas becomes the bedrock for a successful journey. DevOps transcends being a mere set of practices; it embodies a cultural shift driven by collaboration, automation, and an unwavering commitment to continuous improvement. By embracing these essential building blocks, individuals can navigate their DevOps journey with confidence and competence.


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure #Internet of Things #Azure AI #Azure Analytics #Azure IoT Services #Azure Applications #Microsoft Azure PaaS


Secure Your Hybrid Cloud with Windows Server 2022 Standard

Building a Fortress: The Future of Hybrid Cloud Security with Windows Server 2022

In today's rapidly evolving digital landscape, securing your hybrid cloud environment is more critical than ever. Windows Server 2022 Standard emerges as a robust foundation, blending innovative security features with flexible management capabilities to empower businesses of all sizes. This powerful server OS is designed to meet the complex demands of modern IT infrastructure, ensuring your data remains protected while maintaining seamless connectivity across on-premises and cloud environments.

One of the standout features of Windows Server 2022 Standard is its secured-core server capabilities. This technology integrates hardware, firmware, and software security measures to create a resilient fortress against cyber threats. By leveraging features like Secure Boot, hardware root of trust, and virtualization-based security, organizations can significantly reduce the attack surface and safeguard critical assets from malicious intrusions.

Furthermore, Windows Server 2022 enhances multi-layer security through advanced threat protection mechanisms. It offers improved encryption protocols, real-time security monitoring, and streamlined patch management, enabling IT teams to identify vulnerabilities and respond swiftly to emerging threats. This comprehensive security approach ensures that your hybrid cloud remains resilient against increasingly sophisticated cyberattacks.

Managing hybrid environments has never been easier thanks to the improved hybrid server management tools integrated into Windows Server 2022. The platform provides a unified management experience, allowing administrators to control both on-premises and cloud resources efficiently. Features like Azure Arc integration facilitate seamless deployment, monitoring, and updating of servers across diverse environments, reducing operational complexity and boosting productivity.

For organizations leveraging containerization, Windows Server 2022 offers significant improvements in Windows containers. Enhanced support for Kubernetes, improved isolation, and faster container startup times help developers deploy applications swiftly and securely. This ensures that your development pipeline remains agile and responsive to market needs, all while maintaining a high level of security.

Investing in the right licensing is crucial to unlock the full potential of Windows Server 2022 Standard. For competitive pricing and licensing options, explore the Windows Server 2022 Standard license price. This license provides the essential features needed to build a secure, scalable, and efficient hybrid cloud environment.

In conclusion, Windows Server 2022 Standard is more than just an operating system; it’s a strategic enabler for modern enterprises aiming to harness the power of hybrid cloud while maintaining the highest security standards. Embrace this innovative platform to future-proof your IT infrastructure, safeguard your data, and empower your business to thrive in a digital-first world.


Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In the era of cloud-native transformation, data is the fuel powering everything from mission-critical enterprise apps to real-time analytics platforms. However, as Kubernetes adoption grows, many organizations face a new set of challenges: how to manage persistent storage efficiently, reliably, and securely across distributed environments.

To solve this, Red Hat OpenShift Data Foundation (ODF) emerges as a powerful solution — and the DO370 training course is designed to equip professionals with the skills to deploy and manage this enterprise-grade storage platform.

🔍 What is Red Hat OpenShift Data Foundation?

OpenShift Data Foundation is an integrated, software-defined storage solution that delivers scalable, resilient, and cloud-native storage for Kubernetes workloads. Built on Ceph and Rook, ODF supports block, file, and object storage within OpenShift, making it an ideal choice for stateful applications like databases, CI/CD systems, AI/ML pipelines, and analytics engines.

🎯 Why Learn DO370?

The DO370: Red Hat OpenShift Data Foundation course is specifically designed for storage administrators, infrastructure architects, and OpenShift professionals who want to:

✅ Deploy ODF on OpenShift clusters using best practices.

✅ Understand the architecture and internal components of Ceph-based storage.

✅ Manage persistent volumes (PVs), storage classes, and dynamic provisioning.

✅ Monitor, scale, and secure Kubernetes storage environments.

✅ Troubleshoot common storage-related issues in production.

🛠️ Key Features of ODF for Enterprise Workloads

1. Unified Storage (Block, File, Object)

Eliminate silos with a single platform that supports diverse workloads.

2. High Availability & Resilience

ODF is designed for fault tolerance and self-healing, ensuring business continuity.

3. Integrated with OpenShift

Full integration with the OpenShift Console, Operators, and CLI for seamless Day 1 and Day 2 operations.

4. Dynamic Provisioning

Simplifies persistent storage allocation, reducing manual intervention.

5. Multi-Cloud & Hybrid Cloud Ready

Store and manage data across on-prem, public cloud, and edge environments.
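Dynamic provisioning, as described in feature 4 above, means an application only declares a PersistentVolumeClaim and the storage layer creates the matching volume. As a hedged sketch, the claim below is built as a Python dictionary and printed as JSON. The storage class name `ocs-storagecluster-ceph-rbd` is an assumption about what an ODF installation typically creates for block storage; check the actual names on your cluster with `oc get storageclass`. The claim name and size are illustrative.

```python
import json


def pvc_manifest(name, size, storage_class):
    """Build a PersistentVolumeClaim that requests `size` from `storage_class`."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: mounted read-write by a single node at a time.
            "accessModes": ["ReadWriteOnce"],
            # The storage class decides which provisioner creates the volume.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    claim = pvc_manifest(
        name="postgres-data",                         # hypothetical claim name
        size="10Gi",                                  # hypothetical size
        storage_class="ocs-storagecluster-ceph-rbd",  # assumed ODF block class
    )
    print(json.dumps(claim, indent=2))
```

A database pod would then reference the claim by name in its volume definition, without knowing anything about the Ceph cluster behind it.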

📘 What You Will Learn in DO370

Installing and configuring ODF in an OpenShift environment.

Creating and managing storage resources using the OpenShift Console and CLI.

Implementing security and encryption for data at rest.

Monitoring ODF health with Prometheus and Grafana.

Scaling the storage cluster to meet growing demands.

🧠 Real-World Use Cases

Databases: PostgreSQL, MySQL, MongoDB with persistent volumes.

CI/CD: Jenkins with persistent pipelines and storage for artifacts.

AI/ML: Store and manage large datasets for training models.

Kafka & Logging: High-throughput storage for real-time data ingestion.

👨‍🏫 Who Should Enroll?

This course is ideal for:

Storage Administrators

Kubernetes Engineers

DevOps & SRE teams

Enterprise Architects

OpenShift Administrators aiming to become RHCA in Infrastructure or OpenShift

🚀 Takeaway

If you’re serious about building resilient, performant, and scalable storage for your Kubernetes applications, DO370 is the must-have training. With ODF becoming a core component of modern OpenShift deployments, understanding it deeply positions you as a valuable asset in any hybrid cloud team.

🧭 Ready to transform your Kubernetes storage strategy? Enroll in DO370 and master Red Hat OpenShift Data Foundation today with HawkStack Technologies – your trusted Red Hat Certified Training Partner. For more details, visit www.hawkstack.com


Q-AIM: Open Source Infrastructure for Quantum Computing

Q-AIM Quantum Access Infrastructure Management

Open-source Q-AIM for quantum computing infrastructure, management, and access.

Q-AIM (Quantum Access Infrastructure Management) is an open-source, vendor-independent platform that aims to ease the procurement and use of quantum computing hardware.

Important Q-AIM aspects discussed in the article:

Design and Execution: Thanks to its dockerized micro-service design, Q-AIM can be installed on cloud servers and personal devices in a portable and scalable manner. This design prioritises portability, personalisation, and resource efficiency. A reduced memory footprint makes scaling easier and makes Q-AIM suitable for smaller, cheaper server instances. Dockerization bundles the software so that it behaves consistently across environments.

Technology: Q-AIM's software design uses Docker and Kubernetes for containerisation and orchestration, providing scalability and resource control. Google Cloud and Kubernetes can automatically launch, scale, and manage containerised apps. Simple Node.js, Angular, and Nginx interfaces enable interaction with quantum devices. Version control systems like Git simplify code maintenance and collaboration. Container monitoring tools like cAdvisor track resource usage to help maintain performance.

Benefits and Function: Research teams can reduce technical duplication and operational costs with Q-AIM. It streamlines complex interactions and provides a common interface for communicating with the hardware infrastructure regardless of quantum computing system. The system reduces the operational burden of maintaining and integrating quantum hardware resources by merging access and administration, allowing researchers to focus on scientific discovery.

Priorities for Application and Research: The Variational Quantum Eigensolver (VQE) algorithm is studied to demonstrate how Q-AIM simplifies hardware access for complex quantum calculations. In quantum chemistry and materials research, VQE is an essential algorithm that approximates the ground state energy of a molecule or material. With Q-AIM, researchers can focus on algorithm development rather than hardware integration.
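As a toy illustration of the variational idea behind VQE (not the Q-AIM implementation, and computed classically rather than on quantum hardware), consider a single qubit with Hamiltonian H = Z and the trial state Ry(θ)|0⟩ = cos(θ/2)|0⟩ + sin(θ/2)|1⟩. Its energy is cos²(θ/2) - sin²(θ/2) = cos θ, so the ground state energy of -1 is reached at θ = π. The sketch below recovers that minimum with a simple grid search standing in for the classical optimizer; the function names are hypothetical.

```python
import math


def energy(theta):
    """Expectation value of Z in the trial state Ry(theta)|0>."""
    amp0 = math.cos(theta / 2)  # amplitude of |0>
    amp1 = math.sin(theta / 2)  # amplitude of |1>
    # Z has eigenvalue +1 on |0> and -1 on |1>.
    return amp0 ** 2 - amp1 ** 2


def minimize_energy(steps=1000):
    """Grid-search theta in [0, 2*pi) for the lowest energy."""
    best_theta, best_energy = 0.0, energy(0.0)
    for i in range(steps):
        theta = 2 * math.pi * i / steps
        value = energy(theta)
        if value < best_energy:
            best_theta, best_energy = theta, value
    return best_theta, best_energy


if __name__ == "__main__":
    theta, value = minimize_energy()
    print(f"theta = {theta:.4f}, energy = {value:.4f}")
```

In a real VQE run, `energy` would be estimated from repeated measurements on a quantum device, which is the step a platform like Q-AIM is meant to make easier to reach.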

Other Features: The researchers implemented parsing of QASM, a human-readable quantum circuit description language. This simplifies quantum circuit manipulation and the translation of algorithms into hardware-executable instructions. The project also recognises that errors are common in quantum computing and invests in scalable error mitigation measures to improve accuracy and reliability. The methodology factors in cloud deployment costs, based on Google Cloud compute instance prices, so that design decisions favour cost-effectiveness.
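As a rough illustration of what parsing QASM involves, the sketch below reads a small OpenQASM 2.0 style program and extracts its gate operations. It is a hypothetical, deliberately minimal parser (the names `GateOp` and `parse_qasm` are assumptions) and says nothing about how Q-AIM's own parser is built; declarations and measurements are simply skipped.

```python
import re
from typing import List, NamedTuple, Tuple


class GateOp(NamedTuple):
    name: str                 # gate name, e.g. "h" or "cx"
    params: Tuple[str, ...]   # raw parameter expressions, e.g. ("pi/4",)
    qubits: Tuple[int, ...]   # qubit indices the gate acts on


# name, optional "(params)", then the argument list, ending in ";"
_GATE_RE = re.compile(r"^(\w+)(?:\(([^)]*)\))?\s+(.+);$")
_QUBIT_RE = re.compile(r"\w+\[(\d+)\]")
_SKIPPED = ("OPENQASM", "include", "qreg", "creg", "measure", "barrier")


def parse_qasm(source: str) -> List[GateOp]:
    """Return the gate operations found in a small QASM program."""
    ops = []
    for raw in source.splitlines():
        line = raw.split("//")[0].strip()  # drop comments and whitespace
        if not line or line.startswith(_SKIPPED):
            continue
        match = _GATE_RE.match(line)
        if match is None:
            raise ValueError(f"unsupported statement: {line!r}")
        name, params, args = match.groups()
        param_list = tuple(p.strip() for p in params.split(",")) if params else ()
        qubits = tuple(int(i) for i in _QUBIT_RE.findall(args))
        ops.append(GateOp(name, param_list, qubits))
    return ops


EXAMPLE = """
OPENQASM 2.0;
include "qelib1.inc";
qreg q[2];
creg c[2];
h q[0];
cx q[0],q[1];
rz(pi/4) q[1];
measure q -> c;
"""

ops = parse_qasm(EXAMPLE)
```

A structured list like `ops` is the kind of intermediate form that can then be translated into a specific device's instruction set.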

Q-AIM helps research teams and universities buy, run, and scale quantum computing resources, accelerating progress. Future research should improve resource allocation, job scheduling, and framework interoperability with more quantum hardware.

To conclude

The majority of the publications cover quantum computing, with a focus on Q-AIM (Quantum Access Infrastructure Management), an open-source software framework for managing and accessing quantum hardware. Q-AIM uses a dockerized micro-service architecture for scalable and portable deployment to reduce researcher costs and complexity.

Quantum algorithms like Variational Quantum Eigensolver (VQE) are highlighted, but the sources also address quantum machine learning, the quantum internet, and other topics. A unified and adaptable software architecture is needed to fully use quantum technology, according to the study.

#QAIM#quantumcomputing#quantumhardware#Kubernetes#GoogleCloud#quantumcircuits#VariationalQuantumEigensolver#machinelearning#News#Technews#Technology#TechnologyNews#Technologytrends#Govindhtech

Build a Future-Ready Tech Career with a DevOps Course in Pune

In today's rapidly evolving software industry, the demand for seamless collaboration between development and operations teams is higher than ever. DevOps, a combination of “Development” and “Operations,” has emerged as a powerful methodology to improve software delivery speed, quality, and reliability. If you’re looking to gain a competitive edge in the tech world, enrolling in a DevOps course in Pune is a smart move.

Why Pune is a Hub for DevOps Learning

Pune, often dubbed the “Oxford of the East,” is not only known for its educational excellence but also for being a thriving IT and startup hub. With major tech companies and global enterprises setting up operations here, the city offers abundant learning and employment opportunities. Choosing a DevOps course in Pune gives students access to industry-oriented training, hands-on project experience, and potential job placements within the local ecosystem.

Moreover, Pune’s cost-effective lifestyle and growing tech infrastructure make it an ideal city for both freshers and professionals aiming to upskill.

What You’ll Learn in a DevOps Course

A comprehensive DevOps course in Pune equips learners with a wide range of skills needed to automate and streamline software development processes. Most courses include:

Linux Fundamentals and Shell Scripting

Version Control Systems like Git & GitHub

CI/CD Pipeline Implementation using Jenkins

Containerization with Docker

Orchestration using Kubernetes

Cloud Services: AWS, Azure, or GCP

Infrastructure as Code (IaC) with Terraform or Ansible

Many training programs also include real-world projects, mock interviews, resume-building workshops, and certification preparation to help learners become job-ready.

Who Should Take This Course?

A DevOps course in Pune is designed for a wide audience—software developers, system administrators, IT operations professionals, and even students who want to step into cloud and automation roles. Basic knowledge of programming and Linux can be helpful, but many beginner-level courses start from the fundamentals and gradually build up to advanced concepts.

Whether you are switching careers or aiming for a promotion, DevOps offers a high-growth path with diverse opportunities.

Career Opportunities After Completion

Once you complete a DevOps course in Pune, a variety of career paths open up in IT and tech-driven industries. Some of the most in-demand roles include:

DevOps Engineer

Site Reliability Engineer (SRE)

Automation Engineer

Build and Release Manager

Cloud DevOps Specialist

These roles are not only in demand but also come with attractive salary packages and global career prospects. Companies in Pune and across India are actively seeking certified DevOps professionals who can contribute to scalable, automated, and efficient development cycles.

Conclusion

Taking a DevOps course in Pune (https://www.apponix.com/devops-certification/DevOps-Training-in-Pune.html) is more than just an educational step; it's a career-transforming investment. With a balanced mix of theory, tools, and practical exposure, you’ll be well-equipped to tackle real-world DevOps challenges. Pune’s dynamic tech landscape offers a strong launchpad for anyone looking to master DevOps and step confidently into the future of IT.

Unlocking SRE Success: Roles and Responsibilities That Matter

In today’s digitally driven world, ensuring the reliability and performance of applications and systems is more critical than ever. This is where Site Reliability Engineering (SRE) plays a pivotal role. Originally developed by Google, SRE is a modern approach to IT operations that focuses strongly on automation, scalability, and reliability.

But what exactly do SREs do? Let’s explore the key roles and responsibilities of a Site Reliability Engineer and how they drive reliability, performance, and efficiency in modern IT environments.

🔹 What is a Site Reliability Engineer (SRE)?

A Site Reliability Engineer is a professional who applies software engineering principles to system administration and operations tasks. The main goal is to build scalable and highly reliable systems that function smoothly even during high demand or failure scenarios.

🔹 Core SRE Roles

SREs act as a bridge between development and operations teams. Their core responsibilities are usually grouped under these key roles:

1. Reliability Advocate

Ensures high availability and performance of services

Defines Service Level Indicators (SLIs) and Service Level Objectives (SLOs), and tracks them against Service Level Agreements (SLAs)

Identifies and removes reliability bottlenecks
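The SLI and SLO bookkeeping in this role is often expressed as an error budget. The sketch below shows the arithmetic for an availability SLO measured over requests; the function name, the dictionary keys, and the example numbers are illustrative assumptions rather than part of any particular tool.

```python
from typing import Dict


def error_budget(slo_target: float, total_requests: int,
                 failed_requests: int) -> Dict[str, float]:
    """Compare a measured availability SLI against an SLO target.

    slo_target is a fraction such as 0.999 for a "three nines" objective.
    """
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if total_requests <= 0 or not 0 <= failed_requests <= total_requests:
        raise ValueError("request counts are inconsistent")

    sli = (total_requests - failed_requests) / total_requests
    allowed_failures = (1.0 - slo_target) * total_requests
    return {
        "sli": sli,                                   # measured availability
        "allowed_failures": allowed_failures,         # size of the budget
        "remaining_failures": allowed_failures - failed_requests,
        "budget_consumed": failed_requests / allowed_failures,
        "slo_met": float(sli >= slo_target),          # 1.0 if met, else 0.0
    }


# Hypothetical month: one million requests, 400 of them failed.
report = error_budget(slo_target=0.999, total_requests=1_000_000,
                      failed_requests=400)
```

With these illustrative numbers, the budget is about 1,000 failed requests, so 400 failures consume roughly 40% of it. A team might slow down risky releases as the remaining budget approaches zero.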

2. Automation Engineer

Automates repetitive manual tasks using tools and scripts

Builds CI/CD pipelines for smoother deployments

Reduces human error and increases deployment speed

3. Monitoring & Observability Expert

Sets up real-time monitoring tools like Prometheus, Grafana, and Datadog

Implements logging, tracing, and alerting systems

Proactively detects issues before they impact users

4. Incident Responder

Handles outages and critical incidents

Leads root cause analysis (RCA) and postmortems

Builds incident playbooks for faster recovery

5. Performance Optimizer

Analyzes system performance metrics

Conducts load and stress testing

Optimizes infrastructure for cost and performance

6. Security and Compliance Enforcer

Implements security best practices in infrastructure

Ensures compliance with industry standards and regulations (e.g., ISO/IEC 27001, GDPR)

Coordinates with security teams for audits and risk management

7. Capacity Planner

Forecasts traffic and resource needs

Plans for scaling infrastructure ahead of demand

Uses tools for autoscaling and load balancing

🔹 Day-to-Day Responsibilities of an SRE

Here are some common tasks SREs handle daily:

Deploying code with zero downtime

Troubleshooting production issues

Writing automation scripts to streamline operations

Reviewing infrastructure changes

Managing Kubernetes clusters or cloud services (AWS, GCP, Azure)

Performing system upgrades and patches

Running game days or chaos engineering practices to test resilience

🔹 Tools & Technologies Commonly Used by SREs

Monitoring: Prometheus, Grafana, ELK Stack, Datadog

Automation: Terraform, Ansible, Chef, Puppet

CI/CD: Jenkins, GitLab CI, ArgoCD

Containers & Orchestration: Docker, Kubernetes

Cloud Platforms: AWS, Google Cloud, Microsoft Azure

Incident Management: PagerDuty, Opsgenie, VictorOps (now Splunk On-Call)

🔹 Why SRE Matters for Modern Businesses

Reduces system downtime and increases user satisfaction

Improves deployment speed without compromising reliability

Enables proactive problem solving through observability

Bridges the gap between developers and operations

Drives cost-effective scaling and infrastructure optimization

🔹 Final Thoughts

Site Reliability Engineering is about more than monitoring systems: it is about building a resilient, scalable, and efficient infrastructure that keeps digital services running smoothly. With a blend of coding, systems knowledge, and problem-solving skills, SREs play a crucial role in modern DevOps and cloud-native environments.

Mastering the Cloud: Exploring Kubernetes on Azure and Linux Training

Container Orchestration System Software (COSS) has gained immense popularity in India because it simplifies and automates the deployment, scaling, and management of containerized applications. Kubernetes, an open-source platform designed to automate deploying, scaling, and operating application containers, is one of the leading orchestration platforms, and it can be run on Azure. For more details, visit: https://medium.com/@cossindiaa/mastering-the-cloud-exploring-kubernetes-on-azure-and-linux-training-e825daa38396

#kubernetes on azure#linux architecture#linux boot process#linux training#red hat certified#red hat certifications#red hat certified engineer#red hat certified system administrator#red hat certification

Kubernetes Tutorials | Waytoeasylearn

Learn how to become a Certified Kubernetes Administrator (CKA) with this all-in-one Kubernetes course. It is suitable for complete beginners as well as experienced DevOps engineers. This practical, hands-on class will teach you how to understand Kubernetes architecture, deploy and manage applications, scale services, troubleshoot issues, and perform admin tasks. It covers everything you need to confidently pass the CKA exam and run containerized apps in production.

Learn Kubernetes the easy way! 🚀 Best tutorials at Waytoeasylearn for mastering Kubernetes and cloud computing efficiently.

Whether you are studying for the CKA exam or want to become a Kubernetes expert, this course offers step-by-step lessons, real-life examples, and labs focused on exam topics. You will learn from Kubernetes professionals and gain skills that employers are looking for.

Key Learning Outcomes:

Understand Kubernetes architecture, components, and key ideas.

Deploy, scale, and manage containerized apps on Kubernetes clusters.

Learn to use kubectl, YAML files, and troubleshoot clusters.

Get familiar with pods, services, deployments, volumes, namespaces, and RBAC.

Set up and run production-ready Kubernetes clusters using kubeadm.

Explore advanced topics like rolling updates, autoscaling, and networking.

Build confidence with real-world labs and practice exams.

Prepare for the CKA exam with helpful tips, checklists, and practice scenarios.

Who Should Take This Course:

Aspiring CKA candidates.

DevOps engineers, cloud engineers, and system admins.

Software developers moving into cloud-native work.

Anyone who wants to master Kubernetes for real jobs.

DevOps Training Institute in Indore – Your Gateway to Continuous Delivery & Automation

Accelerate Your IT Career with DevOps

In today’s fast-paced IT ecosystem, businesses demand faster deployments, automation, and collaborative workflows. DevOps is the solution—and becoming proficient in this powerful methodology can drastically elevate your career. Enroll at Infograins TCS, the leading DevOps Training Institute in Indore, to gain practical skills in integration, deployment, containerization, and continuous monitoring with real-time tools and cloud technologies.

What You’ll Learn in Our DevOps Course

Our specialized DevOps course in Indore blends development and operations practices to equip students with practical expertise in CI/CD pipelines, Jenkins, Docker, Kubernetes, Ansible, Git, AWS, and monitoring tools like Nagios and Prometheus. The course is crafted by industry experts to ensure learners gain a hands-on understanding of real-world DevOps applications in cloud-based environments.

Key Benefits – Why Our DevOps Training Stands Out

At Infograins TCS, our DevOps training in Indore offers learners several advantages:

In-depth coverage of popular DevOps tools and practices.

Hands-on projects on automation and cloud deployment.

Industry-aligned curriculum updated with the latest trends.

Internship and job assistance for eligible students.

This ensures you not only gain certification but walk away with project experience that matters in the real world.

Why Choose Us – A Trusted DevOps Training Institute in Indore

Infograins TCS has earned its reputation as a reliable DevOps Training Institute in Indore through consistent quality and commitment to excellence. Here’s what sets us apart:

Professional instructors with real-world DevOps experience.

100% practical learning through case studies and real deployments.

Personalized mentoring and career guidance.

Structured learning paths tailored for both beginners and professionals.

Our focus is on delivering value that goes beyond traditional classroom learning.

Certification Programs at Infograins TCS

We provide industry-recognized certifications that validate your knowledge and practical skills in DevOps. This credential is a powerful tool for standing out in job applications and interviews. After completing the DevOps course in Indore, students receive a certificate that reflects their readiness for technical roles in the IT industry.

After Certification – What Comes Next?

Once certified, students can pursue DevOps-related roles such as DevOps Engineer, Release Manager, Automation Engineer, and Site Reliability Engineer. We also help students land internships and jobs through our strong network of hiring partners, real-time project exposure, and personalized support. Our DevOps training in Indore ensures you’re truly job-ready.

Explore Our More Courses – Build a Broader Skill Set

Alongside our flagship DevOps course, Infograins TCS also offers:

Cloud Computing with AWS

Python Programming & Automation

Software Testing – Manual & Automation

Full Stack Web Development

Data Science and Machine Learning

These programs complement DevOps skills and open additional career opportunities in tech.

Why We Are the Right Learning Partner

At Infograins TCS, we don’t just train—we mentor, guide, and prepare you for a successful IT journey. Our career-centric approach, live-project integration, and collaborative learning environment make us the ideal destination for DevOps training in Indore. When you partner with us, you’re not just learning tools—you’re building a future.

FAQs – Frequently Asked Questions

1. Who can enroll in the DevOps course? Anyone with a basic understanding of Linux, networking, or software development can join. This course is ideal for freshers, IT professionals, and system administrators.

2. Will I get certified after completing the course? Yes, upon successful completion of the training and evaluation, you’ll receive an industry-recognized certification from Infograins TCS.

3. Is this DevOps course available in both online and offline modes? Yes, we offer both classroom and online training options to suit your schedule and convenience.

4. Do you offer placement assistance? Absolutely! We provide career guidance, interview preparation, and job/internship opportunities through our dedicated placement cell.

5. What tools will I learn in this DevOps course? You will gain hands-on experience with tools such as Jenkins, Docker, Kubernetes, Ansible, Git, AWS, and monitoring tools like Nagios and Prometheus.

Join the Best DevOps Training Institute in Indore

If you're serious about launching or advancing your career in DevOps, there’s no better place than Infograins TCS – your trusted DevOps Training Institute in Indore. With our project-based learning, expert guidance, and certification support, you're well on your way to becoming a DevOps professional in high demand.

52013l4 in Modern Tech: Use Cases and Applications

In a technology-driven world, identifiers and codes are more than just strings—they define systems, guide processes, and structure workflows. One such code gaining prominence across various IT sectors is 52013l4. Whether it’s in cloud services, networking configurations, firmware updates, or application builds, 52013l4 has found its way into many modern technological environments. This article will explore the diverse use cases and applications of 52013l4, explaining where it fits in today’s digital ecosystem and why developers, engineers, and system administrators should be aware of its implications.

Why 52013l4 Matters in Modern Tech

In the past, loosely defined build codes or undocumented system identifiers led to chaos in large-scale environments. Modern software engineering emphasizes observability, reproducibility, and modularization. Codes like 52013l4:

Help standardize complex infrastructure.

Enable cross-team communication in enterprises.

Create a transparent map of configuration-to-performance relationships.

Thus, 52013l4 isn’t just a technical detail—it’s a tool for governance in scalable, distributed systems.

Use Case 1: Cloud Infrastructure and Virtualization

In cloud environments, maintaining structured builds and ensuring compatibility between microservices is crucial. 52013l4 may be used to:

Tag versions of container images (for example, Docker images deployed to Kubernetes).

Mark configurations for network load balancers operating at Layer 4.

Denote system updates in CI/CD pipelines.

Teams working on cloud platforms like AWS, Azure, or GCP may reference such codes internally. When managing firewall rules, security groups, or deployment scripts, engineers might encounter a 52013l4 identifier.

Use Case 2: Networking and Transport Layer Monitoring

Given its likely relation to Layer 4, 52013l4 becomes relevant in scenarios involving:

Firewall configuration: Specifying allowed or blocked TCP/UDP ports.

Intrusion detection systems (IDS): Tracking abnormal packet flows using rules tied to 52013l4 versions.

Network troubleshooting: Tagging specific error conditions or performance data by Layer 4 function.

For example, a DevOps team might use 52013l4 as a keyword to trace problems in TCP connections that align with a specific build or configuration version.
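The firewall scenario above comes down to matching a protocol and port against an ordered rule list. The sketch below shows that first-match logic in isolation; the `Rule` type, the `evaluate` function, and the example rule set labelled 52013l4 are hypothetical and not tied to any real firewall product.

```python
from typing import List, NamedTuple


class Rule(NamedTuple):
    action: str     # "allow" or "deny"
    protocol: str   # "tcp" or "udp"
    port_min: int   # inclusive lower bound of the port range
    port_max: int   # inclusive upper bound of the port range


def evaluate(rules: List[Rule], protocol: str, port: int,
             default: str = "deny") -> str:
    """Return the action of the first matching rule, else the default."""
    for rule in rules:
        if rule.protocol == protocol and rule.port_min <= port <= rule.port_max:
            return rule.action
    return default


# Hypothetical rule set tagged with the build identifier from the article.
RULESET_52013L4 = [
    Rule("allow", "tcp", 443, 443),      # HTTPS
    Rule("deny", "tcp", 0, 1023),        # other privileged TCP ports
    Rule("allow", "udp", 53, 53),        # DNS
]

https_decision = evaluate(RULESET_52013L4, "tcp", 443)
ssh_decision = evaluate(RULESET_52013L4, "tcp", 22)
unknown_decision = evaluate(RULESET_52013L4, "udp", 9999)
```

Because the first match wins, rule order matters: the specific allow for port 443 must appear before the broader deny range that also covers it.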

Use Case 3: Firmware and IoT Devices

In embedded systems or Internet of Things (IoT) environments, firmware must be tightly versioned and managed. 52013l4 could:

Act as a firmware version ID deployed across a fleet of devices.

Trigger a specific set of configurations related to security or communication.

Identify rollback points during over-the-air (OTA) updates.

A smart home system, for instance, might roll out firmware_52013l4.bin to thermostats or sensors, ensuring compatibility and stable transport-layer communication.

Use Case 4: Software Development and Release Management

Developers often rely on versioning codes to track software releases, particularly when integrating network communication features. In this domain, 52013l4 might be used to:

Tag milestones in feature development (especially for APIs or sockets).

Mark integration tests that focus on Layer 4 data flow.

Coordinate with other teams (QA, security) based on shared identifiers like 52013l4.

Use Case 5: Cybersecurity and Threat Management

Security engineers use identifiers like 52013l4 to define threat profiles or update logs. For instance:

A SIEM tool might generate an alert tagged as 52013l4 to highlight repeated TCP SYN floods.

Security patches may address vulnerabilities discovered in the 52013l4 release version.

An organization’s SOC (Security Operations Center) could use 52013l4 in internal documentation when referencing a Layer 4 anomaly.

By organizing security incidents by version or layer, organizations improve incident response times and root cause analysis.

Use Case 6: Testing and Quality Assurance

QA engineers frequently simulate different network scenarios and need clear identifiers to catalog results. Here’s how 52013l4 can be applied:

In test automation tools, it helps define a specific test scenario.

Load-testing tools like Apache JMeter might reference 52013l4 configurations for transport-level stress testing.

Bug-tracking software may log issues under the 52013l4 build to isolate issues during regression testing.

What is 52013l4?

At its core, 52013l4 is an identifier, potentially used in system architecture, internal documentation, or as a versioning label in layered networking systems. Its format suggests a structured sequence: “52013” might represent a version code, build date, or feature reference, while “l4” is widely interpreted as Layer 4 of the OSI model, the Transport Layer. Because of this association, 52013l4 is often seen in contexts that involve network communication, protocol configuration (e.g., TCP/UDP), or system behavior tracking in distributed computing.
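The reading described here, a numeric prefix followed by an "l" and an OSI layer number, can be sketched as a small parser. This only encodes the interpretation suggested above; the function name and the assumption that every such identifier follows this pattern are illustrative.

```python
import re
from typing import Optional, Tuple

# Standard names of the seven OSI model layers.
OSI_LAYERS = {
    1: "Physical", 2: "Data Link", 3: "Network", 4: "Transport",
    5: "Session", 6: "Presentation", 7: "Application",
}

# Assumed pattern: digits, the letter "l", then a single layer digit.
_ID_RE = re.compile(r"^(\d+)l(\d)$", re.IGNORECASE)


def split_identifier(identifier: str) -> Optional[Tuple[str, int, str]]:
    """Split an identifier into (prefix, layer number, layer name).

    Returns None when the identifier does not fit the assumed pattern.
    """
    match = _ID_RE.match(identifier)
    if match is None:
        return None
    layer = int(match.group(2))
    if layer not in OSI_LAYERS:
        return None
    return match.group(1), layer, OSI_LAYERS[layer]


parsed = split_identifier("52013l4")
```

Here `parsed` holds the prefix "52013" together with layer 4 and its name, "Transport". What the prefix itself means (version, build date, or feature reference) cannot be recovered from the string alone.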

FAQs About 52013l4 Applications

Q1: What kind of systems use 52013l4? Ans. 52013l4 is commonly used in cloud computing, networking hardware, application development environments, and firmware systems. It's particularly relevant in Layer 4 monitoring and version tracking.

Q2: Is 52013l4 an open standard? Ans. No, 52013l4 is not a formal standard like HTTP or ISO. It’s more likely an internal or semi-standardized identifier used in technical implementations.

Q3: Can I change or remove 52013l4 from my system? Ans. Only if you fully understand its purpose. Arbitrarily removing references to 52013l4 without context can break dependencies or configurations.

Conclusion

As modern technology systems grow in complexity, having clear identifiers like 52013l4 ensures smooth operation, reliable communication, and maintainable infrastructures. From cloud orchestration to embedded firmware, 52013l4 plays a quiet but critical role in linking performance, security, and development efforts. Understanding its uses and applying it strategically can streamline operations, improve response times, and enhance collaboration across your technical teams.
